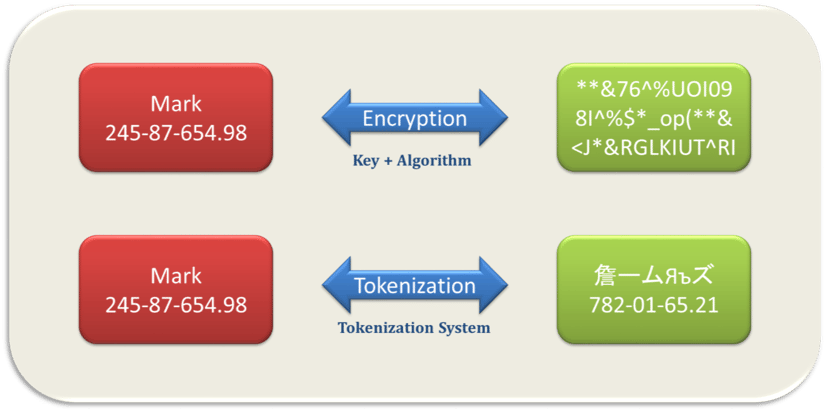

Tokenization is the process of replacing a sensitive data element with a random equivalent, referred to as a token, that has no extrinsic or exploitable meaning. The token is a reference that maps back to the original sensitive data through a tokenization system. Both the original sensitive data and the token are stored encrypted in a secure database. Reversing the process by replacing the token with the original sensitive data is called de-tokenization.
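As a rough illustration of the tokenize and de-tokenize round trip described above, here is a minimal Python sketch. The `TokenVault` class and its method names are hypothetical, the vault is a plain in-memory dictionary, and the encryption of the stored data is left out for brevity; a real tokenization system would keep the mapping in an encrypted, access-controlled database.

```python
import secrets


class TokenVault:
    """Toy in-memory token vault (hypothetical, for illustration only)."""

    def __init__(self):
        self._token_to_value = {}

    def tokenize(self, value: str) -> str:
        # The token comes from a random source; it is not computed from the value.
        token = secrets.token_hex(16)
        while token in self._token_to_value:  # regenerate on an (unlikely) collision
            token = secrets.token_hex(16)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # The only way back to the original value is a lookup in the vault.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111 1111 1111 1111")
original = vault.detokenize(token)
```

Anyone who sees only `token` learns nothing about the card number; recovering it requires access to the vault itself.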

Encryption is defined as "the process of transforming information using an algorithm to make it unreadable to anyone except those possessing special knowledge, usually referred to as a key." It scrambles sensitive data with a mathematical algorithm that can only be reversed with the right "key" (a secret value, often represented as a string of characters). In most cases, the encrypted data bears no apparent relation to the original. To decrypt a message, you need the encrypted data, the algorithm, and the key. Depending on the strength of the algorithm and the length of the key, an attacker using suitable cryptanalysis techniques may be able to work out the algorithm and the key and break the encryption.

Many different approaches exist for creating tokens but, in all cases, the generated token should not be mathematically linked to the original data value. Because there is no mathematical relationship between the original value and its token, there is practically no way to ‘decipher’ a token using cryptanalysis techniques.

Organizations can use tokenization to comply with regulatory requirements such as those of HIPAA, HITECH, PCI DSS, CJIS, GLBA and ITAR, among others. With tokenization, companies can ensure that their sensitive data stays under their control and that only tokenized data ever travels out onto a public network or to cloud-based services. Increasingly, the use of tokens is being accepted as a standard method of ensuring data privacy and security.

The Payment Card Industry has published its guidelines for tokenization. This is a good starting document to get acquainted with what tokenization is and how it can be used to secure data. Implementing these guidelines is a tried and tested method of ensuring data security.

The Differences between Tokenization and Encryption

Encryption is a mathematical operation that uses an algorithm and a key to convert sensitive data to an encrypted value. The encryption key is used to convert the encrypted data back to clear text.
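To make the role of the key concrete, the sketch below uses a toy XOR cipher with a random key as long as the message. It is chosen only because it fits in a few lines of standard-library Python; it is an illustration, not a recommendation, and real systems should use a vetted cipher such as AES through an established library. The function name `xor_bytes` is an assumption made for this example.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    # XOR each data byte with the matching key byte.
    # Applying the same key a second time restores the input.
    if len(key) != len(data):
        raise ValueError("key must be the same length as the data")
    return bytes(d ^ k for d, k in zip(data, key))


plaintext = b"4111111111111111"
key = secrets.token_bytes(len(plaintext))  # random key, as long as the message

ciphertext = xor_bytes(plaintext, key)  # encrypt
recovered = xor_bytes(ciphertext, key)  # decrypt with the same key
```

The ciphertext is computed directly from the plaintext and the key, so whoever holds the key can reverse it without consulting any stored table.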

While encryption can provide very strong protection, there is always a mathematical link between the original data and the encrypted values, and that link can potentially be discovered using cryptanalysis techniques. With a tokenization scheme, there is no such link.

You cannot reverse a token back to its original value by any mathematical operation. To convert a token back to its original value, you need access to a secure lookup table. The table and the data are stored encrypted in a hardened database, kept in a secure location behind a firewall.

Tokens are made with the same structure and data type as the original data. This ensures that tokenized data will not break application functionality. To some extent, this can also be achieved by encryption that is “format preserving”, but the underlying weakness of reversibility, if the key and algorithm are known, will remain.
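One way to picture a token that keeps the structure of the original is the sketch below. It is a hypothetical helper, not a description of any particular product: each digit is replaced with a random digit, each letter with a random letter of the same case, and separators are left in place. To be reversible, the resulting token would still have to be stored alongside the original value in the lookup table.

```python
import secrets
import string


def format_preserving_token(value: str) -> str:
    """Return a random token with the same length and character classes as value."""
    out = []
    for ch in value:
        if ch.isdigit():
            out.append(secrets.choice(string.digits))
        elif ch.isalpha():
            alphabet = string.ascii_uppercase if ch.isupper() else string.ascii_lowercase
            out.append(secrets.choice(alphabet))
        else:
            out.append(ch)  # keep separators such as spaces and dashes
    return "".join(out)


card = "4111-1111-1111-1111"
token = format_preserving_token(card)
```

Because the token still looks like a card number, an application that validates field length or layout can keep working on tokenized data.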

Tokenization removes the private, identifying content from the data you share.

Since there is no way to mathematically reverse a token back to its original value, tokenization has become a preferred approach to meeting data residency requirements. Many countries have enacted laws that demand that sensitive data and private information remain within a specified geographical area. These are known as data residency laws, and they will become more restrictive and explicit with time.

A data tokenization solution provides an elegant and efficient answer to data residency, data sovereignty, privacy and security challenges, permitting enterprises to use cloud services. It simply turns private data into gibberish that has no relation to the original data. In effect, the private data becomes public data that has no relation to any specific individual or subject. Only tokenized data is moved to the premises of cloud service providers. This allows organizations to comply with the law while benefiting from cloud-based processing and storage capabilities.

The CloudMask data tokenization solution provides strong tokenization by continuously changing the token lookup tables. For example, if you send a phrase such as “Hi world” a hundred times in sequence, you will notice that CloudMask generates a hundred different tokens. In addition, the lookup table itself is encrypted.
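The behavior described above, where repeated input yields different tokens, can be imitated with a few lines of Python. This is only a sketch under stated assumptions: the `lookup` dictionary and the `tokenize` function are hypothetical, the table is not encrypted here, and nothing in it reflects how CloudMask is actually implemented.

```python
import secrets

lookup = {}  # hypothetical token -> original value table (unencrypted in this sketch)


def tokenize(value: str) -> str:
    # Draw a fresh random token on every call, even for a value seen before.
    token = secrets.token_urlsafe(12)
    while token in lookup:  # regenerate on an (unlikely) collision
        token = secrets.token_urlsafe(12)
    lookup[token] = value
    return token


tokens = [tokenize("Hi world") for _ in range(100)]
distinct = len(set(tokens))  # 100: every call produced a different token
```

Since identical inputs never share a token, an observer cannot tell from the tokens alone that the same phrase was sent repeatedly.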

With CloudMask, only your authorized parties can decrypt and see your data. Not hackers with your valid password, not cloud providers, not government agencies, and not even CloudMask can see your protected data. Twenty-six government cybersecurity agencies around the world back these claims.

Watch our video and demo at www.vimeo.com/cloudmask
